Difference between revisions of "Sanger's rule"


Revision as of 18:09, 4 April 2012

Sanger's rule, developed by American neurologist Terence Sanger in 1985, is a version of Oja's rule which forces neurons to represent a well-ordered set of principal components of the data set.[1]

Model

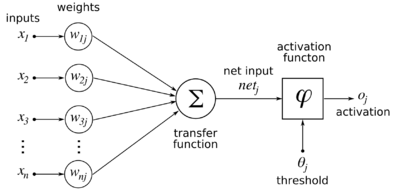

We use a set of linear neurons. Given a set of k-dimensional inputs represented as a column vector <math>\vec{x} = [x_1, x_2, \cdots, x_k]^T</math>, and a set of m linear neurons with (initially random) synaptic weights from the inputs, represented as a matrix formed by m weight column vectors (i.e. a k row x m column matrix):

<center><math>\mathbf{W} = \begin{bmatrix}
w_{11} & w_{12} & \cdots & w_{1m}\\
w_{21} & w_{22} & \cdots & w_{2m}\\
\vdots & & & \vdots \\
w_{k1} & w_{k2} & \cdots & w_{km}
\end{bmatrix}</math></center>

where <math>w_{ij}</math> is the weight between input <i>i</i> and neuron <i>j</i>, the output of the set of neurons is defined as follows:

<center><math>\vec{y} = \mathbf{W}^T \vec{x}</math></center>
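As a small illustration of the output definition <math>\vec{y} = \mathbf{W}^T \vec{x}</math>, the following sketch computes the outputs of m = 2 neurons for a k = 3 dimensional input. The function name `neuron_outputs` and the list-of-rows layout for the weight matrix are assumptions made for this example only.

```python
def neuron_outputs(W, x):
    """Return y = W^T x, where W is a k-by-m matrix stored as a list of k rows
    and x is a length-k input vector."""
    k, m = len(W), len(W[0])
    # Output j is the dot product of weight column j with the input.
    return [sum(W[i][j] * x[i] for i in range(k)) for j in range(m)]


# k = 3 inputs, m = 2 neurons; column j holds the weights of neuron j.
W = [[1.0, 0.0],
     [0.0, 2.0],
     [1.0, 1.0]]
x = [1.0, 2.0, 3.0]

y = neuron_outputs(W, x)
print(y)  # [4.0, 7.0]
```

Each output is the projection of the input onto one weight column, which is why the columns come to represent principal components once they have been trained.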

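Sanger's rule gives the update that is applied to each weight after an input pattern is presented:

<center><math>\Delta w_{ij} = \eta \, y_j \left( x_i - \sum_{n=1}^{j} w_{in} y_n \right)</math></center>

where <math>\eta</math> is the learning rate.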
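A single update step can be sketched as follows, assuming the rule takes its usual form, in which the change to <math>w_{ij}</math> is <math>\eta \, y_j</math> times the input <math>x_i</math> minus the part of it already reconstructed by neurons 1 through <i>j</i>. The function name `sanger_update`, the learning-rate parameter `eta` and the list-of-rows weight layout are assumptions of this sketch, not part of the original description.

```python
def sanger_update(W, x, eta):
    """Return a new k-by-m weight matrix after one Sanger update.

    W is a list of k rows (one per input), each with m entries (one per neuron).
    """
    k, m = len(W), len(W[0])
    # Outputs are computed from the weights before the update: y = W^T x.
    y = [sum(W[i][j] * x[i] for i in range(k)) for j in range(m)]
    new_W = [row[:] for row in W]
    for i in range(k):
        recon = 0.0  # running sum over neurons n <= j of w_in * y_n
        for j in range(m):
            recon += W[i][j] * y[j]
            new_W[i][j] = W[i][j] + eta * y[j] * (x[i] - recon)
    return new_W


W = [[1.0, 0.0],
     [0.0, 1.0]]
x = [1.0, 2.0]

new_W = sanger_update(W, x, eta=0.5)
print(new_W)  # [[1.0, 0.0], [1.0, 1.0]]
```

In this example only the weight from input 2 to neuron 1 changes: neuron 1 does not yet account for the second input component, whereas neurons 1 and 2 together reconstruct the input exactly, so the second neuron's residual is zero.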

Sanger's rule is Oja's rule except that the subtractive contribution comes only from "previous" neurons (those with an equal or lower index) instead of from all neurons. Thus, the first neuron is a pure Oja's rule neuron and extracts the first principal component. The second neuron, however, is forced to find a different principal component because of the subtractive contribution of the first and second neurons. This leads to a well-ordered set of principal components.
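The ordering effect can be checked numerically with a small experiment. The sketch below is illustrative only: it assumes zero-mean two-dimensional Gaussian inputs with standard deviation 3 along the first axis and 1 along the second (so the principal components are the coordinate axes), a fixed learning rate of 0.002, and identical starting weights for both neurons. All names (`sanger_step`, `column`, `unit`) and parameter values are choices made for this example.

```python
import math
import random


def sanger_step(W, x, eta):
    """Apply one Sanger update to W in place (W has k rows and m columns)."""
    k, m = len(W), len(W[0])
    y = [sum(W[i][j] * x[i] for i in range(k)) for j in range(m)]
    for i in range(k):
        recon = 0.0  # contribution of neurons n <= j to input component i
        for j in range(m):
            recon += W[i][j] * y[j]  # W[i][j] is still the old value here
            W[i][j] += eta * y[j] * (x[i] - recon)


def column(W, j):
    """Weight vector of neuron j."""
    return [row[j] for row in W]


def unit(v):
    """Scale v to unit length."""
    norm = math.sqrt(sum(c * c for c in v))
    return [c / norm for c in v]


rng = random.Random(0)  # fixed seed so the run is repeatable

# Both neurons start with the same weights; only the rule itself is asymmetric.
W = [[0.1, 0.1],
     [0.1, 0.1]]

for _ in range(20000):
    x = [rng.gauss(0.0, 3.0), rng.gauss(0.0, 1.0)]
    sanger_step(W, x, eta=0.002)

w1 = unit(column(W, 0))
w2 = unit(column(W, 1))
print("neuron 1 direction:", w1)
print("neuron 2 direction:", w2)
```

With these settings the first neuron should align (up to sign) with the high-variance axis and the second with the low-variance axis, even though both started from the same weights.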

References

- ↑ Script error: No such module "Citation/CS1".