Oja's rule

From Eyewire

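Oja's rule, developed by Finnish computer scientist Erkki Oja in 1982, is a stable version of Hebb's rule: it adds a normalizing term that keeps the synaptic weights bounded, whereas under plain Hebbian learning the weights can grow without limit.[1]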

Model

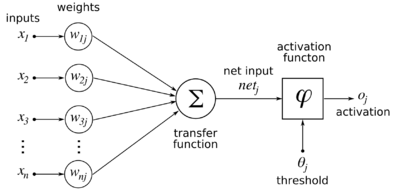

As with Hebb's rule, we use a linear neuron. Given a set of k-dimensional inputs represented as a column vector

$$\mathbf{x} = [x_1, x_2, \ldots, x_k]^T,$$

and a linear neuron with (initially random) synaptic weights from the inputs

$$\mathbf{w} = [w_1, w_2, \ldots, w_k]^T,$$

the output of the neuron is defined as follows:

$$y = \mathbf{w}^T \mathbf{x} = \sum_{i=1}^{k} w_i x_i.$$
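A minimal sketch of the linear neuron's output, y = wᵀx, using only the Python standard library. The function name `neuron_output`, the random seed, and the example values are illustrative assumptions, not part of the original model description.

```python
import random

def neuron_output(w, x):
    """Linear neuron: y = w^T x = sum_i w_i * x_i (hypothetical helper)."""
    if len(w) != len(x):
        raise ValueError("w and x must both be k-dimensional")
    return sum(w_i * x_i for w_i, x_i in zip(w, x))

k = 3
rng = random.Random(0)                        # assumed seed, for reproducibility
w = [rng.gauss(0.0, 1.0) for _ in range(k)]   # initially random synaptic weights
x = [1.0, 2.0, 3.0]                           # an example k-dimensional input
y = neuron_output(w, x)
```

For instance, `neuron_output([0.5, -1.0, 2.0], [1.0, 2.0, 3.0])` evaluates 0.5 − 2.0 + 6.0 and returns `4.5`.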

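Oja's rule gives the update rule, which is applied after each input pattern is presented:

$$\Delta \mathbf{w} = \eta\, y\, (\mathbf{x} - y\, \mathbf{w}),$$

where η is a small positive learning rate. Compared with Hebb's rule, $\Delta \mathbf{w} = \eta\, y\, \mathbf{x}$, the extra term $-\eta\, y^2 \mathbf{w}$ acts as a weight decay that keeps the weights from growing without bound. The rule can be derived by applying Hebb's rule and then rescaling the weight vector to unit length,

$$\mathbf{w}_{t+1} = \frac{\mathbf{w}_t + \eta\, y\, \mathbf{x}}{\lVert \mathbf{w}_t + \eta\, y\, \mathbf{x} \rVert},$$

and expanding the right-hand side as a Taylor series in η, assuming $\lVert \mathbf{w}_t \rVert = 1$, with terms of order $O(\eta^n)$ ignored for $n > 1$ since η is small.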
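The claim that Oja's rule approximates "Hebb's rule followed by normalizing the weights to unit length" up to terms of order η² can be checked numerically. This sketch (standard library only) applies one step of each update to the same unit-length weight vector and measures the largest component-wise difference. The helper names and the example vectors are illustrative assumptions.

```python
import math

def oja_update(w, x, eta):
    """One step of Oja's rule: w <- w + eta * y * (x - y * w), with y = w^T x."""
    y = sum(wi * xi for wi, xi in zip(w, x))
    return [wi + eta * y * (xi - y * wi) for wi, xi in zip(w, x)]

def normalized_hebb_update(w, x, eta):
    """Hebb's rule (w + eta * y * x) followed by rescaling to unit length."""
    y = sum(wi * xi for wi, xi in zip(w, x))
    v = [wi + eta * y * xi for wi, xi in zip(w, x)]
    norm = math.sqrt(sum(vi * vi for vi in v))
    return [vi / norm for vi in v]

w = [0.6, 0.8]    # unit length, as the Taylor expansion assumes
x = [1.0, -0.5]   # arbitrary example input pattern
gaps = []
for eta in (1e-1, 1e-2, 1e-3):
    a = oja_update(w, x, eta)
    b = normalized_hebb_update(w, x, eta)
    gap = max(abs(ai - bi) for ai, bi in zip(a, b))
    gaps.append(gap)
    print(f"eta={eta:g}  max |Oja - normalized Hebb| = {gap:.1e}")
```

Each tenfold reduction in η should shrink the gap by roughly a factor of 100, consistent with the neglected terms being of order η².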

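It can be shown that Oja's rule extracts the first principal component of the data set: the weight vector converges to a unit-length eigenvector of the input correlation matrix $E[\mathbf{x}\mathbf{x}^T]$ with the largest eigenvalue (for zero-mean inputs, this is the covariance matrix). If several neurons are each trained independently with Oja's rule on the same inputs, all of them converge to that same principal component (up to sign), so the extra neurons add no information. Sanger's rule, also known as the generalized Hebbian algorithm, was formulated to get around this issue.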
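The convergence claim can be illustrated with a small simulation. This sketch (pure Python) draws synthetic zero-mean 2-D inputs whose largest variance lies along a known direction `u`, then trains two neurons with Oja's rule from different random starting weights. The data distribution, the constant learning rate `eta=0.002`, and the helper names are assumptions chosen for illustration.

```python
import math
import random

def oja_train(samples, w, eta):
    """Apply Oja's rule once per sample and return the final weight vector."""
    w = list(w)
    for x in samples:
        y = sum(wi * xi for wi, xi in zip(w, x))
        w = [wi + eta * y * (xi - y * wi) for wi, xi in zip(w, x)]
    return w

def dot(a, b):
    return sum(ai * bi for ai, bi in zip(a, b))

# Assumed synthetic data: standard deviation 3 along u, 0.5 along the orthogonal axis.
theta = math.radians(30)
u = [math.cos(theta), math.sin(theta)]          # true first principal component
u_perp = [-math.sin(theta), math.cos(theta)]
rng = random.Random(42)
samples = []
for _ in range(5000):
    a, b = rng.gauss(0.0, 3.0), rng.gauss(0.0, 0.5)
    samples.append([a * u[0] + b * u_perp[0], a * u[1] + b * u_perp[1]])

# Two neurons with different random initial weights, trained independently.
w1 = oja_train(samples, [rng.gauss(0, 1), rng.gauss(0, 1)], eta=0.002)
w2 = oja_train(samples, [rng.gauss(0, 1), rng.gauss(0, 1)], eta=0.002)

print("|w1|      =", round(math.sqrt(dot(w1, w1)), 3))
print("|w1 . u|  =", round(abs(dot(w1, u)), 3))
print("|w1 . w2| =", round(abs(dot(w1, w2)), 3))
```

Each printed value should come out close to 1: both weight vectors end up with roughly unit length and point along ±u, which is the redundancy that Sanger's rule was designed to remove.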

References

- [1] Oja, Erkki (November 1982). "Simplified neuron model as a principal component analyzer". Journal of Mathematical Biology 15 (3): 267–273.