Difference between revisions of "Conditional principal components analysis"

(→Interpretation) |

|||

| Line 65: | Line 65: | ||

* If the output is active and an input is also active, add 1 minus the weight (times a learning rate). | * If the output is active and an input is also active, add 1 minus the weight (times a learning rate). | ||

| − | + | The second rule has the effect of driving the weight towards zero (asymptotically), and the third rule has the effect of driving the weight towards one (asymptotically). Overall, the rules combine to equilibrate the weight towards the probability that the output is active given that the input is active. | |

==References== | ==References== | ||

<references/> | <references/> | ||

Revision as of 15:55, 10 April 2012

Conditional principal components analysis seeks to restrict neurons to perform principal components analysis only when activated.[1]

Contents

Model

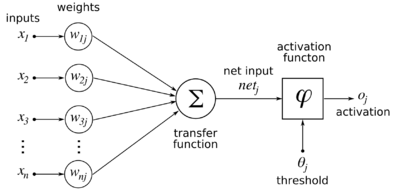

We use a set of linear neurons with binary inputs. Given a set of k-dimensional binary inputs represented as a column vector <math>\vec{x} = [x_1, x_2, \cdots, x_k]^T</math>, and a set of m linear neurons with (initially random) synaptic weights from the inputs, represented as a matrix formed by m weight column vectors (i.e. a k row x m column matrix):

w_{11} & w_{12} & \cdots & w_{1m}\\ w_{21} & w_{22} & \cdots & w_{2m}\\ \vdots & & & \vdots \\ w_{k1} & w_{k2} & \cdots & w_{km}

\end{bmatrix}</math>where <math>w_{ij}</math> is the weight between input i and neuron j, the output of the set of neurons is defined as follows:

The CPCA rule gives the update rule which is applied after an input pattern is presented:

With a set of such neurons, typically a k-Winner-Takes-All pass is run before the update: all neurons are evaluated, and the <math>k</math> neurons with the highest outputs have their outputs set to 1, while the rest have their outputs set to 0.

Derivation

We want the weight from input <math>i</math> to neuron <math>j</math> to eventually settle at the probability that neuron <math>j</math> will be activated given that input <math>i</math> is activated. That is, when the weight is at equilibrium, we have:

By the definition of conditional probability:

Using the total probability theorem, we can condition the numerator and denominator on the input patterns. If an input pattern is <math>t</math>, then we have:

P(y_j=1) &= \sum_t P(y_j=1 \mid t)P(t),\\ P(y_j=1 \wedge x_i=1) &= \sum_t P(y_j=1 \wedge x_i=1 \mid t)P(t)

\end{align}</math>Substituting back into the equation for <math>w_{ij}</math> and doing some rearrangement, we get:

A good assumption is that all input patterns in the set of input patterns are equally likely to appear, so that <math>P(t)</math> is a constant and can be eliminated:

Since inputs and outputs are either 0 or 1, conveniently the average over all patterns of an input or output (or a combination of input and output) will be equal to the probability of that input or output (or combination of input and output) being 1. Thus:

We can easily turn this into an update rule which will drive the weights to the above equilibrium condition:

Interpretation

Since the inputs and outputs are binary, the update rule can be interpreted as follows:

- If the output is not active, do not alter any weight.

- If the output is active and an input is not active, subtract the weight (times a learning rate).

- If the output is active and an input is also active, add 1 minus the weight (times a learning rate).

The second rule has the effect of driving the weight towards zero (asymptotically), and the third rule has the effect of driving the weight towards one (asymptotically). Overall, the rules combine to equilibrate the weight towards the probability that the output is active given that the input is active.